There are two tools for gathering information through search engines:

– theHarvester, using the terminal

– Maltego

Using theHarvester through the terminal only gives you information that has been leaked or is publicly available through search engines. The tool is easy to use: open a terminal on Kali Linux and type theharvester or theHarvester, depending on your version of Kali Linux. The main options are -d, the domain you want to search; -l, the maximum number of results to retrieve; and -b, the search engine (data source) you want to use.

For example: theHarvester -d facebook.com -l 100 -b bing

When you run the command, it shows the results it can find, up to a maximum of 100 results.

Another tool is Maltego, an application available on Kali Linux. Before using it, you must register an account and log in. After logging in, you will see many tools available for you to use. To find data behind a website or domain, click New Graph and pick the type of entity you want to trace. For example, to find information traces from a domain, choose Domain, enter the domain name you want to investigate, and then run a transform. Wait a while until all the data is displayed; the results are shown as a tree with branches.

If you want to find data about a person, you can, but you have to log in to your Twitter account first. Once you are logged in, Maltego will find data related to the person you are searching for.

Author Archives: 2201807791vicky

Footprinting Tools

There are several footprinting tools available in Kali Linux, such as:

– whois, using the terminal

– the host command

– Sam Spade

– Greenwich

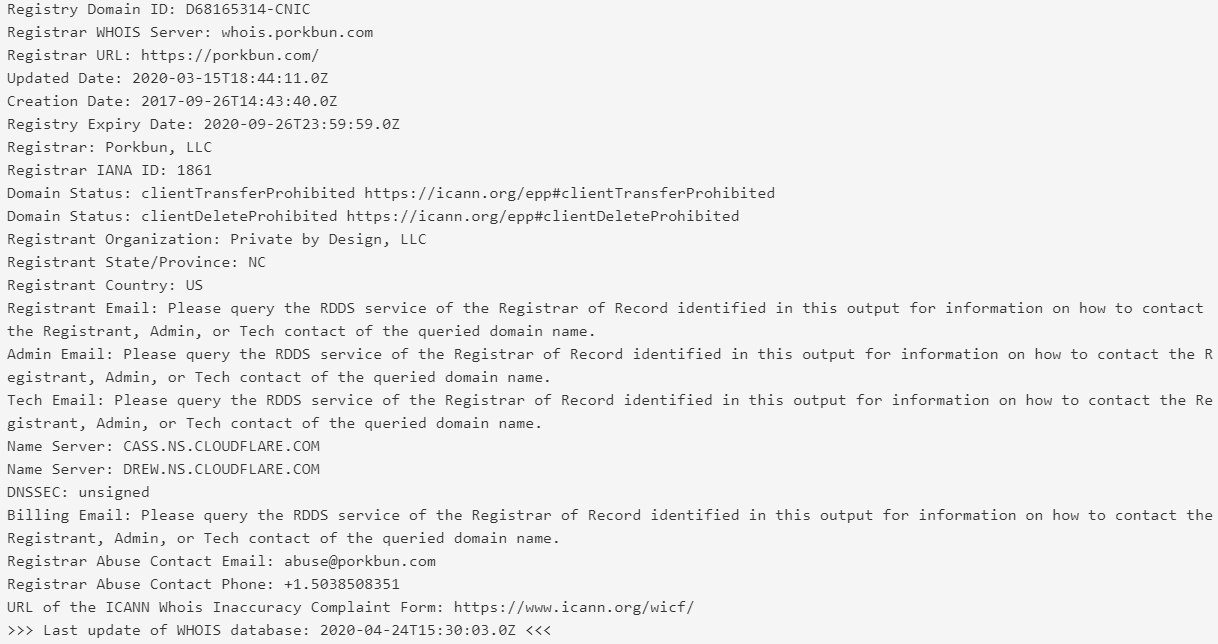

The purpose of footprinting tools is to gather data that is left exposed on the internet. Information such as the owner of the domain, the name server, the physical address, and email addresses can be exposed if the website is not secured well enough to hide these prints. Some of these tools are not exclusive to Kali Linux; many websites also offer footprinting tools.

For example, using a whois lookup website

or using censys.io.

Other than that, there are also tools to track DNS (Domain Name System) records, such as:

– dnsenum

– dig
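The address lookups that host and dig perform can also be done from a script. Below is a minimal Python sketch, assuming only the standard library: it asks the operating system's resolver for a host's IPv4 and IPv6 addresses through `socket.getaddrinfo`, roughly the address part of what `host example.com` prints. It does not query MX, NS, or TXT records the way dig or dnsenum can, and the function name `resolve` is our own.

```python
import socket

def resolve(hostname):
    """Return the sorted, de-duplicated IP addresses the system resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP address.
    return sorted({info[4][0] for info in infos})

if __name__ == "__main__":
    # localhost resolves without network access; try a real domain when online.
    print(resolve("localhost"))
```

With network access, `resolve("example.com")` returns the domain's current addresses, which you can then feed into a whois lookup.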

Man In The Middle

A man-in-the-middle attack is an attack on the network in which the attacker sits between the communicating parties. With the right tools, an attacker can monitor the traffic of their own computer, their local network, or other networks. This attack is dangerous because it intercepts network traffic: any data sent over the network can be captured and read, especially credentials entered when logging in or signing up on a website.

One of the tools used for this attack is Burp Suite, which intercepts web traffic on your computer by acting as a proxy between the browser and the websites it visits. In Kali Linux, this tool is already installed and ready to use.

Once Burp Suite is open, click the Proxy tab and turn Intercept on, then open the Options tab, which shows the default proxy listener, 127.0.0.1:8080.

Using Firefox in Kali Linux, open Preferences and search for “proxy”, open the connection settings, select manual proxy configuration, and enter the proxy listener address shown in Burp Suite.

After switching to the manual proxy, try to visit any website. HTTPS pages will not load, because Burp Suite’s certificate is not among the certificates the browser trusts by default. To get the certificate, go to http://burp and click “CA Certificate” to download it. Then go back to the browser’s Preferences, search for “certificate”, and import the downloaded certificate there.

If you now reload the website that failed earlier, it will load. Back in Burp Suite, you will see your network traffic. Be extremely careful when entering your username and password, because the intercepted traffic shows this data and other people may be able to see it!
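Besides the browser, a script can also be pointed at Burp’s listener so its requests show up in the Proxy tab. A minimal Python sketch using the standard library’s `urllib.request.ProxyHandler`, assuming Burp’s default listener at 127.0.0.1:8080 (change the constant if yours differs); `build_burp_opener` is a hypothetical helper name.

```python
import urllib.request

# Assumption: Burp Suite's default proxy listener; change it if yours differs.
BURP_PROXY = "http://127.0.0.1:8080"

def build_burp_opener(proxy=BURP_PROXY):
    """Return a urllib opener whose HTTP and HTTPS requests go through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

With Burp running, `build_burp_opener().open("http://example.com/")` should appear in the Proxy tab and wait there while Intercept is on. HTTPS URLs will fail certificate verification unless Python also trusts Burp’s CA certificate, for the same reason the browser needed it.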

If you click the lock icon next to the Firefox address bar, you can view the certificate. Because it was not issued by a recognized certificate authority, Firefox flags it, and the “Verified by” name differs from the usual one. If you want it to look like a legitimate certificate, you can generate your own certificate and edit the issuer name and other details.

week 7

This week’s topic is Apriori, an algorithm for finding which items are most frequently bought together in a market.

The Apriori algorithm goes through the list of purchases and counts which combinations of items are bought together most often. The purpose is to organize the order of items in the market. For example, people often buy butter and bread at the same time, perhaps with milk for breakfast, so the market places those items near each other to make them easier for customers to find and pick up.

The algorithm works somewhat like naive Bayes in that it is based on counting frequencies; however, instead of computing the probability of a class, it looks for the groups of items that people most often buy together. It computes the support of each item (the percentage of purchases that contain it), eliminates items below the minimum support percentage, and combines the remaining items into larger groups. This loops until no larger group reaches the minimum support. After that, calculate the confidence of the rules formed from the frequent groups of items, for example the fraction of purchases containing butter that also contain bread.
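A minimal Python sketch of the loop above, assuming each purchase is a set of item names and the minimum support is a fraction between 0 and 1. It follows the textbook Apriori steps (count support, drop infrequent itemsets, join the survivors into larger candidates) rather than any particular library; `apriori` and `confidence` are hypothetical helper names and the purchases are made up.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every itemset with support >= min_support."""
    n = len(transactions)
    candidates = {frozenset([item]) for t in transactions for item in t}  # size-1 candidates
    frequent, k = {}, 1
    while candidates:
        # Support = fraction of transactions that contain every item of the candidate.
        level = {}
        for c in candidates:
            support = sum(1 for t in transactions if c <= t) / n
            if support >= min_support:
                level[c] = support
        frequent.update(level)
        # Join surviving k-itemsets into (k+1)-itemsets; keep a candidate only if
        # all of its k-item subsets were frequent (the Apriori pruning rule).
        k += 1
        candidates = set()
        for a, b in combinations(list(level), 2):
            union = a | b
            if len(union) == k and all(frozenset(s) in level for s in combinations(union, k - 1)):
                candidates.add(union)
    return frequent

def confidence(frequent, antecedent, consequent):
    """confidence(A -> B) = support(A and B together) / support(A)."""
    a = frozenset(antecedent)
    return frequent[a | frozenset(consequent)] / frequent[a]

if __name__ == "__main__":
    purchases = [
        {"bread", "butter", "milk"},
        {"bread", "butter"},
        {"bread", "milk"},
        {"butter", "milk"},
        {"bread", "butter", "milk"},
        {"bread", "eggs"},
    ]
    frequent = apriori(purchases, min_support=0.5)
    for itemset, support in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
        print(sorted(itemset), round(support, 2))
    print(round(confidence(frequent, {"butter"}, {"bread"}), 2))  # 0.75
```

Here eggs (1 of 6 purchases) is eliminated in the first pass, and the bread/butter/milk triple (2 of 6) falls below the 50% threshold, so the loop stops at pairs.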

week 6

This week’s lesson is about learning from observation, mostly about clustering. Clustering can be based on various attributes, such as gender, occupation, heredity (biological), or appearance (species).

Data can form multiple clusters, but which data point belongs to which cluster has to be calculated. To do this on a graph, the distance from each data point to the center of each cluster is calculated, and each point is assigned to the cluster it is closest to. The distance is calculated using the Euclidean or Manhattan method.

After assigning the points to clusters, compute the mean coordinates of each cluster, which become its new center. Then recalculate the distances again, repeating until neither the cluster coordinates nor the cluster memberships change.
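The loop described above is the k-means algorithm. A minimal Python sketch, assuming points are tuples of numbers and the starting cluster centers are given (in practice they are often picked at random). The distance can be switched between Euclidean and Manhattan; note that the mean update is the standard choice for Euclidean distance, while with Manhattan distance the median is the matching update (k-medians). The function names are our own.

```python
import math

def euclidean(p, q):
    """Straight-line distance: square root of the summed squared differences."""
    return math.dist(p, q)

def manhattan(p, q):
    """Grid distance: sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(p, q))

def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

def kmeans(points, centers, distance=euclidean, max_iter=100):
    """Return (centers, assignment); assignment[i] is the cluster index of points[i]."""
    centers = [tuple(c) for c in centers]
    assignment = None
    for _ in range(max_iter):
        # Step 1: assign every point to its closest center.
        new_assignment = [
            min(range(len(centers)), key=lambda k: distance(p, centers[k]))
            for p in points
        ]
        # Stop once memberships no longer change (so the centers will not change either).
        if new_assignment == assignment:
            break
        assignment = new_assignment
        # Step 2: move each center to the mean of its members (an empty cluster keeps its center).
        for k in range(len(centers)):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:
                centers[k] = mean_point(members)
    return centers, assignment

if __name__ == "__main__":
    points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
    centers, labels = kmeans(points, [(0, 0), (10, 10)])
    print(labels)      # [0, 0, 0, 1, 1, 1]
    print(centers[1])  # (8.5, 8.5)
```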

As for the project, further discussion has not yet been held.

week 5

This week’s lecture is about uncertainty reasoning, as part of learning about machine learning.

There are three types of machine learning:

– supervised learning

– unsupervised learning

– reinforcement learning

The difference between these types is as follows. Supervised learning means that the classification and learning are supervised by the maker, who helps identify (label) the things shown to the machine.

Unsupervised learning means that the machine is shown things without labels and groups them into clusters by the similarity of their characteristics.

Reinforcement learning is learning from rewards: the machine learns whether an action is right or wrong from the reward it receives and tries to earn that reward.

Probabilistic reasoning is also needed to make rational decisions depending on the situation. Probability theory provides several rules for this, such as Bayes’ rule and naive Bayes.
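To make naive Bayes concrete: Bayes’ rule gives P(class | features) ∝ P(class) · P(features | class), and the “naive” assumption is that the features are independent given the class, so P(features | class) becomes a product of one probability per feature. A small Python sketch on categorical data, assuming add-one (Laplace) smoothing so that a value never seen with a class does not zero out that class; the weather-style data and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train(rows, labels):
    """Count classes and (feature index, class) -> value occurrences."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)
    values_per_feature = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            values_per_feature[i].add(value)
    return class_counts, value_counts, values_per_feature

def predict(model, row):
    """Return the posterior P(class | row) for every class."""
    class_counts, value_counts, values_per_feature = model
    total = sum(class_counts.values())
    scores = {}
    for label, n in class_counts.items():
        score = n / total  # prior P(class)
        for i, value in enumerate(row):
            k = len(values_per_feature[i])
            # Smoothed likelihood P(feature_i = value | class), multiplied in
            # because features are assumed independent given the class.
            score *= (value_counts[(i, label)][value] + 1) / (n + k)
        scores[label] = score
    evidence = sum(scores.values())  # normalizing constant P(row)
    return {label: s / evidence for label, s in scores.items()}

if __name__ == "__main__":
    rows = [("sunny", "no"), ("sunny", "yes"), ("rainy", "yes"), ("rainy", "no"), ("sunny", "no")]
    labels = ["play", "play", "stay", "stay", "play"]
    posterior = predict(train(rows, labels), ("sunny", "no"))
    print(max(posterior, key=posterior.get))  # play
```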

As for the project, because we have been unable to meet each other, further discussion about the project has not been held.

week 4

This week’s lecture is about adversarial search, and the main topic is minimax, which is usually used by computers that play games automatically to decide which move to make next.

The search is similar to depth-first search (DFS): the game tree alternates between max and min levels, representing the players taking turns, so after player 1 moves, player 2 moves next. The max and min levels capture the two players’ opposing goals: the maximizing player picks the move with the highest score and the minimizing player picks the lowest, each depending on what the previous player did.

This kind of automated play is usually applied to two-player games such as chess, Go, tic-tac-toe, et cetera.
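A minimal Python sketch of minimax, assuming the game tree is already built as nested lists where a number is a leaf score (from the maximizing player’s point of view) and a list holds the positions reachable in one move. The recursion explores the tree depth first, as described above; a real game would generate children from the board instead, and `best_move` is a hypothetical helper.

```python
def minimax(node, maximizing=True):
    """Return the minimax value of a game tree given as nested lists."""
    if isinstance(node, (int, float)):
        return node  # leaf: final score of this line of play
    # Players alternate, so each child is evaluated for the other player.
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def best_move(tree):
    """Index of the root move with the highest value, assuming the opponent replies with min."""
    values = [minimax(child, maximizing=False) for child in tree]
    return values.index(max(values))

if __name__ == "__main__":
    # Depth-3 tree: max chooses at the root, min replies, max chooses at the bottom.
    tree = [[[3, 5], [6, 9]], [[1, 2], [0, -1]]]
    print(minimax(tree))    # 5
    print(best_move(tree))  # 0
```

The first move is chosen even though the second branch contains no worse leaf for max than -1, because min would steer that branch to 0, while the first branch guarantees at least 5.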

As for the project, we are sure that we are going to use TensorFlow; however, what kind of project we are going to make is still undecided.

week 3

This week’s session is about informed search, the counterpart of last week’s uninformed search, and about local search. Examples are A* and greedy best-first search (greedy BFS); the only part of local search that I remember is the genetic algorithm.

The difference between uninformed and informed search is the availability of a heuristic value, an estimate of how far a state is from the goal. For instance, on the road, our travel distance and convenience are both considered, and the heuristic value is the rate of traffic. Greedy BFS only looks at the heuristic (the traffic rate) and takes the path with the lower traffic rate. A*, however, combines the distance traveled so far with the heuristic to reach the destination. Because it uses all the information it has, A* finds the best (lowest-cost) solution, as long as the heuristic never overestimates the remaining cost.
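A small Python sketch contrasting the two, on a made-up road graph where edge weights are distances and `h` estimates the remaining distance to the goal (the traffic analogy is left out to keep the numbers simple). The only difference between the searches is the priority: greedy best-first uses h(n) alone, while A* uses g(n) + h(n), where g(n) is the cost so far. The graph, heuristic values, and function name are assumptions for illustration; here `h` never overestimates.

```python
import heapq

def best_first(graph, h, start, goal, use_path_cost):
    """Greedy best-first search if use_path_cost is False, A* if True. Returns (path, cost)."""
    frontier = [(h[start], 0, start, [start])]  # (priority, cost so far, node, path)
    best_g = {start: 0}
    while frontier:
        _, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, g
        for neighbor, cost in graph.get(node, {}).items():
            new_g = g + cost
            if new_g < best_g.get(neighbor, float("inf")):
                best_g[neighbor] = new_g
                # Greedy ranks by h(n) only; A* ranks by g(n) + h(n).
                priority = new_g + h[neighbor] if use_path_cost else h[neighbor]
                heapq.heappush(frontier, (priority, new_g, neighbor, path + [neighbor]))
    return None, float("inf")

if __name__ == "__main__":
    graph = {"S": {"A": 1, "B": 4}, "A": {"G": 10}, "B": {"G": 2}}
    h = {"S": 5, "A": 1, "B": 2, "G": 0}
    print(best_first(graph, h, "S", "G", use_path_cost=False))  # (['S', 'A', 'G'], 11)
    print(best_first(graph, h, "S", "G", use_path_cost=True))   # (['S', 'B', 'G'], 6)
```

Greedy search is lured toward A because it looks closest to the goal and ends up with a total cost of 11, while A* notices that the route through B is cheaper overall.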

As for the genetic algorithm, it borrows the idea of survival of the fittest from genetics. Just as the fittest genes survive in nature, the algorithm keeps the best candidates in each generation, combines them, and randomly mutates them, trying to find the combination of genes that gives the best result.
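A minimal Python sketch of a genetic algorithm on the toy “OneMax” problem, where a genome is a list of bits and its fitness is the number of 1s. Keeping the fitter half as parents, one-point crossover, and bit-flip mutation are one common set of choices among many; the parameter values are arbitrary assumptions. The best genome is always carried over unchanged (elitism), so the best fitness never decreases from one generation to the next.

```python
import random

def fitness(genome):
    """OneMax fitness: number of 1 bits (the all-ones genome is optimal)."""
    return sum(genome)

def genetic_algorithm(length=20, pop_size=30, generations=50, mutation_rate=0.02, seed=0):
    """Return (best genome, best fitness per generation)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = [population[0][:]]          # elitism: best genome survives unchanged
        while len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, length)     # one-point crossover
            child = mom[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history

if __name__ == "__main__":
    best, history = genetic_algorithm()
    print(fitness(best), history[:5])
```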

For the project, we are still deciding which algorithm to use, so for now we are finalizing our idea and there has been no progress yet.

week 2

This week’s session is about uninformed search, such as breadth-first search, depth-first search, iterative deepening depth-first search, uniform cost search, and depth-limited search.

First of all, for problem solving, the agent needs to understand its situation: what its goal is and what its environment is. It needs to know which situations it has to respond to and which it can ignore, so its knowledge of its surroundings has to be adequate. In its data, it should know the state, the cost of the path, the actions along the path (right, left, up, down), and the number of steps (the depth of the node) it takes.

Secondly, we learned the differences between the search algorithms; each has its own advantages and weaknesses for problem solving. Breadth-first search visits nodes level by level. Depth-first search follows a node’s children as deep as possible, backtracks when the deepest child is not the goal, and then searches the other children. Uniform cost search uses a priority queue, so it always expands the node with the smallest path cost so far.
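A compact Python sketch of the three strategies described above, on small made-up graphs: breadth-first search with a FIFO queue, depth-first search by recursion (which backtracks automatically when a branch ends), and uniform cost search with a priority queue keyed on path cost. The graphs and function names are assumptions for illustration.

```python
from collections import deque
import heapq

def bfs(graph, start):
    """Visit nodes level by level using a FIFO queue."""
    order, seen, queue = [], {start}, deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in graph[node]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return order

def dfs(graph, node, seen=None):
    """Go as deep as possible along each child, backtracking at dead ends."""
    if seen is None:
        seen = []
    seen.append(node)
    for child in graph[node]:
        if child not in seen:
            dfs(graph, child, seen)
    return seen

def ucs(graph, start, goal):
    """Always expand the cheapest frontier node. Returns (path, cost)."""
    frontier = [(0, start, [start])]
    explored = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, cost
        if node in explored:
            continue
        explored.add(node)
        for child, step in graph[node].items():
            if child not in explored:
                heapq.heappush(frontier, (cost + step, child, path + [child]))
    return None, float("inf")

if __name__ == "__main__":
    tree = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F"], "D": [], "E": ["F"], "F": []}
    print(bfs(tree, "A"))  # ['A', 'B', 'C', 'D', 'E', 'F']
    print(dfs(tree, "A"))  # ['A', 'B', 'D', 'E', 'F', 'C']
    roads = {"A": {"B": 1, "C": 5}, "B": {"C": 1, "D": 7}, "C": {"D": 2}, "D": {}}
    print(ucs(roads, "A", "D"))  # (['A', 'B', 'C', 'D'], 4)
```

Uniform cost search finds A-B-C-D (cost 4) even though A-C-D has fewer steps, because it orders the frontier by cost rather than by depth.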

For the project, my group and I decided to make something that recognizes emotions using TensorFlow. We might extend it beyond emotion recognition; however, we are still deciding and researching.

week 1

This week’s session introduced us to the concept of intelligent systems and to the project that needs to be done by the end of this course.

First of all, the concepts of machine learning and artificial intelligence are often confused. Artificial intelligence is about a machine (artificial) that is intelligent enough to be a part of our daily life. The purpose of its existence is to make our everyday life and problem solving easier and better. Some of us may see AI as a Terminator that could lead to the doom of humanity. However, the idea of machine learning is to train AI to have common sense like humans, to prevent that from happening.

Secondly, training an AI requires knowing many variables and factors, such as the environment. The machine in each environment needs to know what it is doing and its purpose for being there. And when something unexpected happens or appears, it needs rationality and human common sense to deal with the situation.

As for the upcoming project, my group and I have yet to discuss and decide on the topic we should work on.